Symmetri has joined Yes&.

We have some exciting news. Symmetri has decided to join the Yes& team to offer more possibilities to our clients.

Posted by Joe Haskins on April 18, 2013

When Commercial Progression launched the new Ad Sales website for National Geographic Networks, the client loved it so much that they wanted everyone to come and see it. To drive visits, they sent an e-blast to thousands of ad agencies around the world that offered the first 100 visitors a chance to win a free iPad. The resulting traffic spike brought our server to its knees.

Up until this point, the Ad Sales division had been hosting their websites with a popular shared hosting provider. Like many other hosts, this company crammed as many accounts as it could onto each server, which often resulted in slow load times and database connection problems. As part of the relaunch, we deployed the new site on one of our own servers. Because hosting isn't our primary business, we care more about our clients having a great experience than about how much money we can make from a single server. To keep our clients happy, we keep everything properly tuned and keep the number of accounts per server low. Despite all this, the server simply wasn't built to handle thousands of people all trying to access a site at the same time.

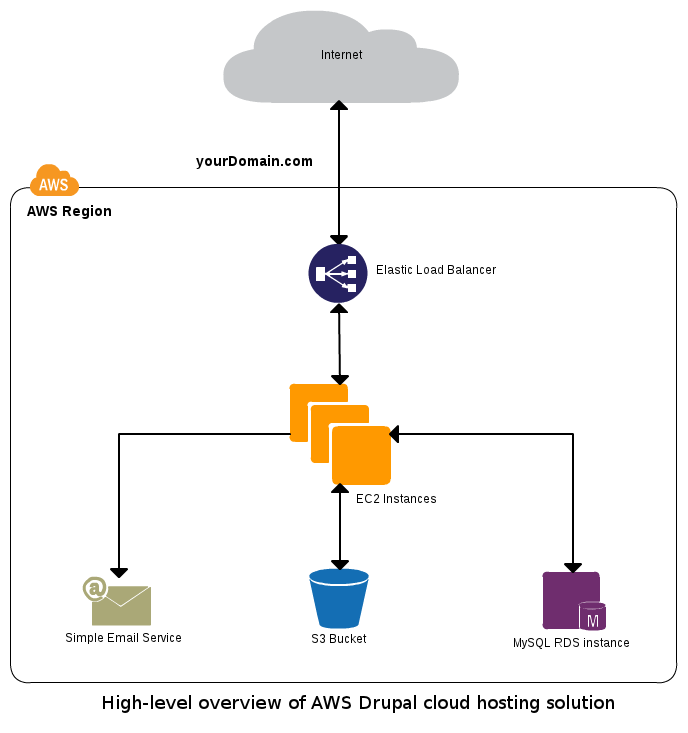

In order to salvage the project and our relationship with National Geographic Networks, we had to quickly come up with a solution that could handle massive traffic spikes. We evaluated a number of options, but in the end Amazon Web Services won out. Some parts of the solution have evolved, and will continue to evolve as technology changes and we find better ways of doing things, but the core parts have remained relatively constant.

EC2 instances are similar to a VPS that you might find elsewhere. They come in a variety of sizes and are basically a blank slate for whatever you need. One key difference is that their local storage is not permanent: unless you create a volume using Amazon's Elastic Block Store (EBS) service and mount it, anything stored on the instance will be lost when it is terminated. When we originally set up the cloud hosting solution for Nat Geo, we used instances with EBS volumes. Since then, due to reliability issues (we had an EBS volume become corrupted after a major AWS outage), we have moved to a system that sets everything up in the instance storage at boot time (more on that later).

The EC2 instances run PHP and a web server, and they are what actually serves the website. Since you can't run a Drupal site without any code, we pull that in from our version control repository at startup. A little bit of scripting lets us keep files in version control that would not ordinarily be versioned. For example, a copy of settings.php configured for production use is stored as "settings.php.prod" in version control, and it becomes settings.php on the production instances. One key point: aside from unimportant things like temporary files, nothing should be written to the instance once it is operational.
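The "settings.php.prod becomes settings.php" step can be sketched as a small boot-time helper. This is a minimal illustration, assuming the naming convention described above (any `<name>.prod` file is copied to `<name>` next to it); it is not the exact script we run, and `promote_prod_files` is a hypothetical name.

```python
import shutil
from pathlib import Path

# Hypothetical naming convention: "<file>.prod" in the repo becomes "<file>".
PROD_SUFFIX = ".prod"


def promote_prod_files(checkout_dir):
    """Copy every '<name>.prod' file under checkout_dir to '<name>' beside it.

    The .prod originals are left in place. Returns the destination paths written.
    """
    written = []
    for src in sorted(Path(checkout_dir).rglob("*" + PROD_SUFFIX)):
        if not src.is_file():
            continue  # skip any directory that happens to end in ".prod"
        dest = src.with_name(src.name[: -len(PROD_SUFFIX)])
        shutil.copyfile(src, dest)
        written.append(dest)
    return written
```

Run right after the code checkout (for example `promote_prod_files("/var/www/site")`, path assumed), it turns `sites/default/settings.php.prod` into `sites/default/settings.php` without the production credentials ever living in the development copy of settings.php.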

RDS is Amazon's fully managed database service. RDS instances come with sensible security settings and default configurations based on the instance size, but much of that can easily be changed later if needed. Key features include the ability to dynamically increase capacity, automatic backups and optional Multi-AZ (multiple Availability Zone) failover. In short, you don't have to worry about managing a separate database server.

For Nat Geo, we used a small MySQL RDS instance. Because the database server is separate from the web server, we are not limited to a single web server: we can run as many web server instances as needed to handle traffic. Connecting to an RDS instance from Drupal (or anything else) is as easy as connecting to any other database server. When you create the instance, you set up a username, password, and database name. Once it has been provisioned, Amazon provides an "endpoint" (a hostname) for the instance. Just use that endpoint as the database server hostname along with the credentials you created and you're good to go.
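To make "just use the endpoint as the hostname" concrete, here is a sketch that renders the database block of a Drupal 7 settings.php from RDS connection details. It assumes a Drupal 7 site; the endpoint, database name and credentials are placeholders, and `drupal7_databases_block` is a hypothetical helper, not part of Drupal or AWS.

```python
def php_string(value):
    """Quote a Python string as a single-quoted PHP string literal."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def drupal7_databases_block(endpoint, database, username, password, port=3306):
    """Render a Drupal 7 $databases entry pointing at an RDS MySQL endpoint."""
    fields = [
        ("driver", "mysql"),
        ("database", database),
        ("username", username),
        ("password", password),
        ("host", endpoint),  # the RDS endpoint is used like any other hostname
        ("port", str(port)),
        ("prefix", ""),
    ]
    lines = ["$databases['default']['default'] = array("]
    lines += [f"  {php_string(key)} => {php_string(val)}," for key, val in fields]
    lines.append(");")
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder values only; substitute the endpoint AWS shows for your instance.
    print(drupal7_databases_block(
        "mydb.abc123xyz.us-east-1.rds.amazonaws.com", "natgeo", "drupal", "s3cr3t"))
```

Nothing in the output is RDS-specific, which is the point: Drupal only sees a MySQL host, so the same settings.php.prod works no matter how many web servers are running.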

Since there can be multiple webservers, handling file uploads can become interesting. Obviously, storing them on the server they were uploaded to is out of the question since they will only be accessible if someone connects to that server and they will be lost when the instance terminates if you are just using the instance storage. Thankfully, Amazon has a service that is meant specifically for storing files: S3. If we put all of the files in one central place, that solves one problem. It also creates a couple more: how do we get the uploaded files to S3 and how do we make Drupal serve them from there?

We are currently solving both problems with S3FS, which allows us to map a local filesystem path on the web server to an S3 bucket. When someone uploads a file on one instance, it goes into Drupal's files directory as normal. Since that directory is mapped to an S3 bucket, the file is automatically transferred to the bucket so it can be accessed by any of the web server instances. If someone requests a file, S3FS either pulls the file from its local cache or transfers it from the S3 bucket if it's not cached. S3FS is completely transparent to programs, so no changes need to be made to your web server or your site to use it.
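The write-through, read-through behavior described above can be modeled in a few lines. This is a toy illustration only: a dict stands in for the shared S3 bucket and each "web server" has its own local cache. It is not how S3FS is implemented internally, and `CachedBucketFS` is a hypothetical name.

```python
class CachedBucketFS:
    """Toy model of a bucket-backed directory with a per-server read cache."""

    def __init__(self, bucket):
        self.bucket = bucket   # shared by every web server instance
        self.cache = {}        # local to this instance only
        self.bucket_reads = 0  # reads that had to go out to the bucket

    def write(self, path, data):
        # An upload lands in the mapped directory and is pushed to the bucket,
        # so every other instance can see it.
        self.bucket[path] = data
        self.cache[path] = data

    def read(self, path):
        if path in self.cache:
            return self.cache[path]  # served from the local cache
        data = self.bucket[path]     # cache miss: fetch from the bucket
        self.bucket_reads += 1
        self.cache[path] = data
        return data
```

For example, if `web1` and `web2` share one bucket and a file is uploaded through `web1`, the first request for it on `web2` fetches it from the bucket and later requests are served from `web2`'s cache.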

If you're running multiple web servers, you need some way to distribute traffic between them. You could use round-robin DNS, but that doesn't work well since you would need to change your DNS records every time you add or remove an instance. You could also spend a lot of time installing and configuring a load balancer or reverse proxy on an EC2 instance and hope that the instance can cope with any traffic surges. Odds are the time you spend getting everything installed and set up properly could be put to better use elsewhere. Even after it's set up, you need to continually monitor and maintain it, since you have just added another potential point of failure.

Instead of dealing with all that, it's far cheaper and easier to use Amazon's Elastic Load Balancing (ELB) service. Managing the instances assigned to the load balancer is simple, and it can automatically detect problems with your instances and route around them until they are resolved. With an ELB, you don't have to monitor or maintain the load balancer itself, so you can focus on more important things.
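The idea of "routing around" a failing instance can be shown with a toy round-robin balancer that only hands out instances passing a health check. This is a conceptual sketch, not ELB's actual routing algorithm; with ELB you configure the health check in AWS rather than writing any of this, and `ToyLoadBalancer` is a hypothetical name.

```python
from itertools import count


class ToyLoadBalancer:
    """Round-robin over registered instances that pass a health check."""

    def __init__(self, instances, is_healthy):
        self.instances = list(instances)
        self.is_healthy = is_healthy  # callable: instance -> bool
        self._counter = count()

    def register(self, instance):
        self.instances.append(instance)

    def deregister(self, instance):
        self.instances.remove(instance)

    def pick(self):
        # Unhealthy instances are skipped until their health check passes again.
        healthy = [i for i in self.instances if self.is_healthy(i)]
        if not healthy:
            raise RuntimeError("no healthy instances")
        return healthy[next(self._counter) % len(healthy)]
```

Compared with round-robin DNS, adding or removing capacity is a `register`/`deregister` call on the balancer rather than a DNS change that clients may cache.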

Getting a server up and running on AWS is simple and requires no commitment; you just pay for the hours you use. Unfortunately, that also makes it a popular choice for people engaged in nefarious activities such as spamming. As a result, many of the IP addresses used by EC2 instances tend to be blacklisted by email providers, and some providers proactively block entire IP ranges assigned to Amazon. This makes it very difficult to send legitimate email from an EC2 instance (email confirmations, password resets, etc.).

Amazon's solution to this is SES (Simple Email Service). Before you can send email, you must verify that you control the address or domain it is sent from. In addition, SES has various measures in place to prevent spam and to deal with it proactively when it does occur, so SES IP addresses don't get blacklisted. Sending costs 10 cents per thousand messages (at the time of writing) plus data transfer fees, so unless you're sending an enormous number of messages, it will barely make a dent in your budget. Once it has been set up, you can use SES just like any other SMTP server that requires authentication.
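Because SES behaves like any authenticated SMTP server, sending a message from an instance looks the same as it would anywhere else. The sketch below uses Python's standard `smtplib`; the regional endpoint, port 587 with STARTTLS, the SES SMTP credentials and the verified sender are all assumptions you would replace with your own. The cost helper simply applies the 10-cents-per-thousand figure quoted above and ignores data transfer.

```python
import smtplib
import ssl
from email.message import EmailMessage

# Assumed values: use the SES SMTP endpoint for your region and the SMTP
# credentials generated for SES. The sender must already be verified in SES.
SES_SMTP_HOST = "email-smtp.us-east-1.amazonaws.com"
SES_SMTP_PORT = 587  # STARTTLS


def build_message(sender, recipient, subject, body):
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_via_ses(msg, username, password, host=SES_SMTP_HOST, port=SES_SMTP_PORT):
    """Send like any authenticated SMTP server: STARTTLS, log in, send."""
    with smtplib.SMTP(host, port, timeout=30) as smtp:
        smtp.starttls(context=ssl.create_default_context())
        smtp.login(username, password)
        smtp.send_message(msg)


def estimated_message_cost(messages, price_per_thousand=0.10):
    """Per-message charge in dollars only; data transfer fees are not included."""
    return messages / 1000 * price_per_thousand
```

At that price, 50,000 password-reset and confirmation emails come to about $5 before data transfer, which is why the cost rarely matters for a site like this.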

As we started doing more work with AWS, we also started looking for better ways to manage the projects we were deploying there. After a bit of research and numerous internal discussions, we settled on RightScale. It offers a variety of features that have improved our processes for cloud-hosted sites, and its pricing is usage-based, so we pay for what we're actually managing with the service.

One of the best things about RightScale is that instead of following the traditional path of building a machine image with all of the software you need, you can use base images that are available on multiple cloud providers and have everything installed and configured automatically at boot time via Chef recipes and RightScripts (essentially just shell scripts with access to input parameters set from the RightScale interface). It is still possible to build your own image (for example, if it takes a long time to install everything you need) and use it with RightScale, although you lose some flexibility by doing so. RightScale also provides monitoring and simplifies the process of setting up autoscaling.

For our cloud-hosted sites, we have found that an image with the essentials (Apache, PHP, APC, S3FS, etc.) pre-installed shaves several minutes off instance startup time (every minute counts in an autoscaling situation). We still use Chef and RightScripts to handle per-site configuration, pull in the code from the version control repository, and register/deregister the instance with the load balancer.
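The per-site boot steps can be pictured as an ordered list of commands driven by script inputs. This sketch only builds the commands (a dry run); the input names `SITE_REPO`, `SITE_BRANCH`, `DOCROOT` and `ELB_NAME` are hypothetical stand-ins for RightScript inputs, modeled here as a dict of environment variables, and the load balancer step assumes the AWS CLI's classic ELB commands rather than whatever tooling RightScale uses internally.

```python
import shlex


def boot_commands(env, instance_id):
    """Return the commands a boot script might run, in order (hypothetical inputs)."""
    repo = env["SITE_REPO"]
    branch = env.get("SITE_BRANCH", "master")
    docroot = env.get("DOCROOT", "/var/www/site")
    elb = env["ELB_NAME"]
    settings_dir = f"{docroot}/sites/default"
    return [
        # 1. Pull the site code from version control into instance storage.
        ["git", "clone", "--depth", "1", "--branch", branch, repo, docroot],
        # 2. Per-site configuration: promote the production settings file.
        ["cp", f"{settings_dir}/settings.php.prod", f"{settings_dir}/settings.php"],
        # 3. Only once the site is ready, start taking traffic.
        ["aws", "elb", "register-instances-with-load-balancer",
         "--load-balancer-name", elb, "--instances", instance_id],
    ]


def shutdown_commands(env, instance_id):
    """Deregister from the load balancer before the instance goes away."""
    return [["aws", "elb", "deregister-instances-from-load-balancer",
             "--load-balancer-name", env["ELB_NAME"], "--instances", instance_id]]


if __name__ == "__main__":
    example_inputs = {"SITE_REPO": "git@example.com:site.git", "ELB_NAME": "site-web"}
    for cmd in boot_commands(example_inputs, "i-0123456789abcdef0"):
        print(shlex.join(cmd))
```

Registering with the load balancer last matters for autoscaling: an instance should not receive requests until its code and settings are in place.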

New Relic provides an amazing performance monitoring service. I'm not going to go into a lot of detail here, but you don't have to look far to find others talking about how awesome it is. One feature of particular interest is its Drupal integration, which lets you easily see which modules have the most impact on your site's performance. They offer a limited free plan along with a two-week trial of the Pro plan, so if you're looking for performance monitoring (or if you're just curious), there's really no excuse not to take a look.

If you happen to be using RightScale to manage your cloud infrastructure, things get even better. RightScale and New Relic have a partnership that gives RightScale customers free Standard accounts (which normally carry a per-server, per-month cost).

We are constantly looking at different ways we can refine our cloud hosting solution. Here are a few of the things that we're looking at:

There's really no need for the servers running the site to also serve the static files. In fact, offloading static files can reduce the number of instances needed to run the site. Since we are already storing files on S3, we are currently looking at the AmazonS3 Drupal module. Because it uses Drupal's file stream wrapper system, uploaded files are stored directly on S3 and Drupal can link to each file by its direct URL, bypassing the web server entirely.
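What "a direct URL to the file" means in practice is a mapping from a stream-wrapper URI to a public S3 address. The sketch below assumes an `s3://` scheme, a publicly readable object and S3's virtual-hosted-style address `https://<bucket>.s3.amazonaws.com/<key>`; it illustrates the idea and is not code from the AmazonS3 module. `direct_s3_url` and the bucket name are hypothetical.

```python
from urllib.parse import quote


def direct_s3_url(uri, bucket, scheme="s3"):
    """Map a stream-wrapper URI such as 's3://images/logo.png' to a direct S3 URL."""
    prefix = scheme + "://"
    if not uri.startswith(prefix):
        raise ValueError(f"expected a {prefix} URI, got {uri!r}")
    key = uri[len(prefix):]
    # Percent-encode the object key but keep '/' as the path separator.
    return f"https://{bucket}.s3.amazonaws.com/{quote(key, safe='/')}"
```

A browser requesting that URL talks to S3 directly, so none of the web server instances spend a request slot on images, CSS or downloads.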

We are currently using Apache 2.2 + mod_php5 because it is a stable, well-tested configuration. When we moved to RightScale to manage our infrastructure, we decided to stay with that configuration since we knew it worked and it was easy to get running with RightScale. We also had our first RightScale-managed site launching in a couple of weeks, so we wanted to spend that time familiarizing ourselves with RightScale instead of making radical changes.

Now that things have settled down and we're more comfortable with RightScale, we're starting to look at other options. One thing that is immediately clear is that mod_php5 must go. It will be replaced with PHP-FPM, which separates PHP processing from the web server while still maintaining compatibility with APC.

Once mod_php5 is out of the picture, we can re-evaluate our choice of web server. Nginx has a lot of supporters (including a few in this office) and appears to perform quite well, but Apache 2.4 made some radical changes, including a processing model (the event MPM) that is similar to the one used by Nginx, so Apache hasn't been ruled out yet.

For sites that need thumbnails generated from videos or videos transcoded to a different format, we are currently using ffmpeg. Unfortunately, it's not as simple as "yum install ffmpeg" (or "apt-get install ffmpeg" on Debian-based distros), since for legal reasons pre-compiled binary packages don't typically include support for certain codecs that are essential for web video (e.g., H.264). That means ffmpeg must be compiled from source.
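A site typically drives ffmpeg with two kinds of command: grab a single frame as a thumbnail, and re-encode to H.264. The sketch below builds those commands and checks whether the installed ffmpeg was built with the `libx264` encoder, which is exactly what stock packages tend to lack. The timestamp, CRF value and AAC audio choice are illustrative defaults, not settings from our sites, and older ffmpeg builds may need extra flags for AAC.

```python
import shutil
import subprocess


def thumbnail_cmd(src, dest, at_seconds=5):
    # Seek to at_seconds, then write exactly one video frame to an image file.
    return ["ffmpeg", "-ss", str(at_seconds), "-i", src,
            "-frames:v", "1", "-y", dest]


def h264_cmd(src, dest, crf=23):
    # Re-encode video with libx264 and audio with AAC (e.g. into an .mp4).
    return ["ffmpeg", "-i", src, "-c:v", "libx264", "-crf", str(crf),
            "-c:a", "aac", "-y", dest]


def has_encoder(name):
    """Return True if the installed ffmpeg lists the given encoder."""
    if shutil.which("ffmpeg") is None:
        return False
    result = subprocess.run(["ffmpeg", "-encoders"], capture_output=True,
                            text=True, check=False)
    return name in result.stdout


def run(cmd):
    subprocess.run(cmd, check=True)
```

Calling `has_encoder("libx264")` at boot is a cheap way to confirm that an instance started from the video-enabled image rather than a stock one before `run(h264_cmd(...))` is ever attempted.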

Someone has created an install script that greatly simplifies the process, but builds can still take quite a while (2+ hours on a small EC2 instance). We are currently dealing with this by creating a special image for sites that need video support, with ffmpeg compiled and installed using the script, but we don't consider that an ideal solution for a couple of reasons:

Thankfully, there are a couple of options for handling video operations like thumbnail generation and transcoding in the cloud: